Hello people,

First-time user here. After messing around with SP107/SP108 controllers, I have decided to look into some real LED control and have ordered a few PB V3s. I feel like going straight down the Arduino route would be a pretty steep learning curve, and PB seems like a happy medium.

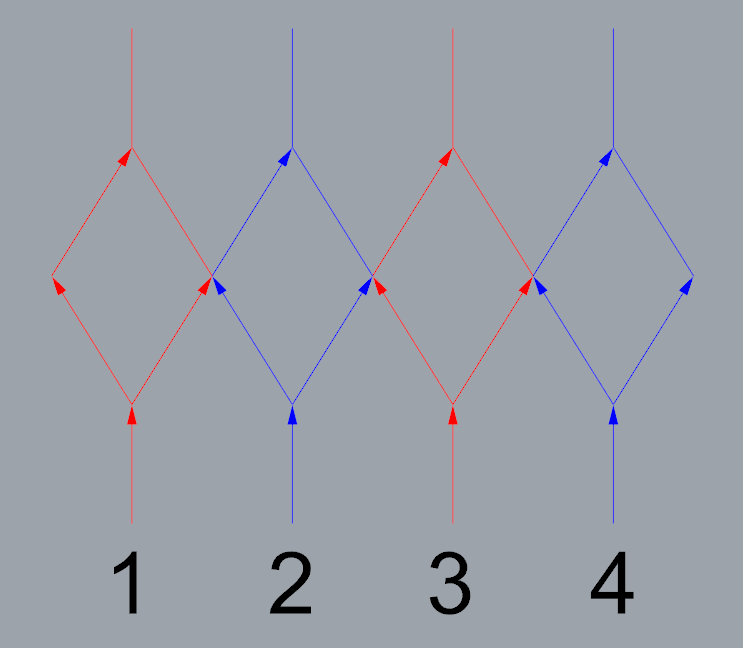

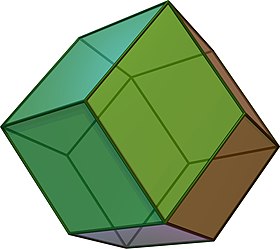

My project is an infinity mirror rhombic dodecahedron using WS2812B strips (60 LEDs/m), which I intend to bump up to higher densities in later versions. Currently it uses a single data input, mirrored 4 ways with one fork (split) per line. The colours on the diagram are just for clarity. I know that switching to a mapped system requires a completely different strip configuration, and I plan on tweaking my design to suit.
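A quick way to see why the current mirrored layout and a 3D map don't mix: when one data line is split into several branches, LED i on every branch receives the same data and therefore shares index i, while a map can give each index only one (x, y, z) position. The sketch below is plain Python; `index_owners` is a hypothetical helper and the branch lengths are made up, not taken from the actual build.

```python
from collections import defaultdict


def index_owners(branch_lengths):
    """Group physical LEDs by the data index they receive.

    When one data line is split into several branches, LED i on every
    branch is driven by the same data, so they all share index i.
    """
    owners = defaultdict(list)
    for branch, length in enumerate(branch_lengths):
        for i in range(length):
            owners[i].append((branch, i))
    return dict(owners)


# hypothetical: one data line mirrored into four branches of 30 LEDs each
owners = index_owners([30, 30, 30, 30])
physical = sum(len(v) for v in owners.values())
print(f"physical LEDs: {physical}, distinct indices: {len(owners)}")
# physical LEDs: 120, distinct indices: 30
```

In other words, a mapped build needs every LED on its own index, i.e. one continuous chain with no forks, which is why the strip configuration has to change.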

A couple of clips running on an SP107E:

Yes, I have seen the Adam Savage One Day Build. I will be using a wiring scheme similar, if not identical, to the one they ended up with. I am open to any ideas on this too.

My questions are:

- How would I go about mapping this object? Will I be manually plotting pixels, or vertices/edges? (I can use my CAD files to get x, y, z point data for each pixel in sequence.)
- Would changing the pixel density require a complete re-mapping, or is that an adjustable variable?
- How difficult is it to adapt premade effects to work on a custom 3D map? Will I be making them from scratch every time? I feel like my current LED sequence would give better ‘plug and play’ results with 1D effects, but the results from taking the time to map it correctly would be unmatched.
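On the first two questions, a minimal offline sketch, assuming the coordinates are generated in Python on a PC and then pasted into the Pixelblaze mapper as a JSON array of [x, y, z] points (my understanding of what the mapper accepts; worth checking against the docs). `rhombic_dodecahedron` and `build_map` are hypothetical helpers, the 0.5 m edge length is made up, and the edge order is arbitrary rather than the real wiring order. The map ends up with one point per LED, but those points are derived from the vertices and edges, so nothing is plotted by hand.

```python
import itertools
import json
import math


def rhombic_dodecahedron():
    """Return (vertices, edges) for a rhombic dodecahedron centred on the origin.

    The 8 'cube' vertices (±1, ±1, ±1) each join the 3 nearest of the 6
    'octahedral' vertices (±2, 0, 0), (0, ±2, 0), (0, 0, ±2): 24 equal edges.
    """
    cube = list(itertools.product((-1, 1), repeat=3))
    octa = []
    for axis in range(3):
        for sign in (-2, 2):
            v = [0, 0, 0]
            v[axis] = sign
            octa.append(tuple(v))
    edges = []
    for ci, c in enumerate(cube):
        for oi, o in enumerate(octa):
            axis = next(a for a in range(3) if o[a] != 0)
            # adjacent when the octahedral vertex lies on the cube vertex's side
            if (o[axis] > 0) == (c[axis] > 0):
                edges.append((ci, len(cube) + oi))
    return cube + octa, edges


def build_map(vertices, edges, edge_length_m, leds_per_m):
    """Place LEDs evenly along each edge, in the order the edges are listed.

    Reorder `edges` (and swap (a, b) for edges wired backwards) so that the
    list follows the real data path from the controller.
    """
    # whole LEDs that fit on one edge (epsilon guards against float round-off)
    per_edge = math.floor(edge_length_m * leds_per_m + 1e-9)
    points = []
    for a, b in edges:
        pa, pb = vertices[a], vertices[b]
        for i in range(per_edge):
            t = (i + 0.5) / per_edge  # centre of this LED's slot on the edge
            points.append([pa[k] + (pb[k] - pa[k]) * t for k in range(3)])
    # one common scale factor so the shape keeps its proportions in 0..1
    lo = min(min(p) for p in points)
    hi = max(max(p) for p in points)
    return [[round((c - lo) / (hi - lo), 4) for c in p] for p in points]


vertices, edges = rhombic_dodecahedron()
# hypothetical 0.5 m edges; use the real edge length and wiring order from CAD
pixel_map = build_map(vertices, edges, edge_length_m=0.5, leds_per_m=60)
print(len(pixel_map))  # 720 (24 edges x 30 LEDs)
map_json = json.dumps(pixel_map)  # text to paste into the mapper
```

Density is just the `leds_per_m` argument: at 144 LEDs/m the same call yields 1,728 points, so a density change means re-running the script rather than re-plotting. If the CAD export already gives one point per pixel in wiring order, that list can replace `build_map` entirely.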
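On the effects question, a Python simulation of the idea rather than actual Pixelblaze code: as I understand it, a Pixelblaze pattern can export a `render3D(index, x, y, z)` function that receives each pixel's mapped coordinates (normalized to 0..1), alongside the plain `render(index)` used by 1D patterns. Porting a 1D effect often comes down to replacing `index / pixelCount` with a coordinate or a distance. The effect functions and `render_frame` below are hypothetical examples.

```python
import math


def rainbow_1d(index, pixel_count, t):
    """1D effect: hue depends only on the pixel's position along the strip."""
    return (index / pixel_count + t) % 1.0


def rainbow_3d(x, y, z, t):
    """The same rainbow ported to 3D: hue follows height (z) instead of index."""
    return (z + t) % 1.0


def sphere_pulse(x, y, z, t):
    """Brightness shell expanding from the centre of the 0..1 cube as t grows."""
    r = math.dist((x, y, z), (0.5, 0.5, 0.5))
    return max(0.0, 1.0 - abs(r - t % 1.0) * 10)


def render_frame(pixel_map, effect, t):
    """Evaluate a 3D effect once per mapped pixel, like a render3D loop."""
    return [effect(x, y, z, t) for x, y, z in pixel_map]


tiny_map = [[0.0, 0.0, 0.0], [0.5, 0.5, 0.5], [1.0, 1.0, 1.0]]
print(render_frame(tiny_map, rainbow_3d, 0.25))  # [0.25, 0.75, 0.25]
```

Because the 3D versions read only coordinates, they don't depend on wiring order or LED density, so the same effect keeps working after the map is regenerated at a higher density.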

I’m sure having the PB unit and software in hand will help me understand how to move forward; I just wanted to get an idea of what I’ll be doing so that I can hit the ground running once everything turns up.

Thanks in advance.

(Edited for formatting)