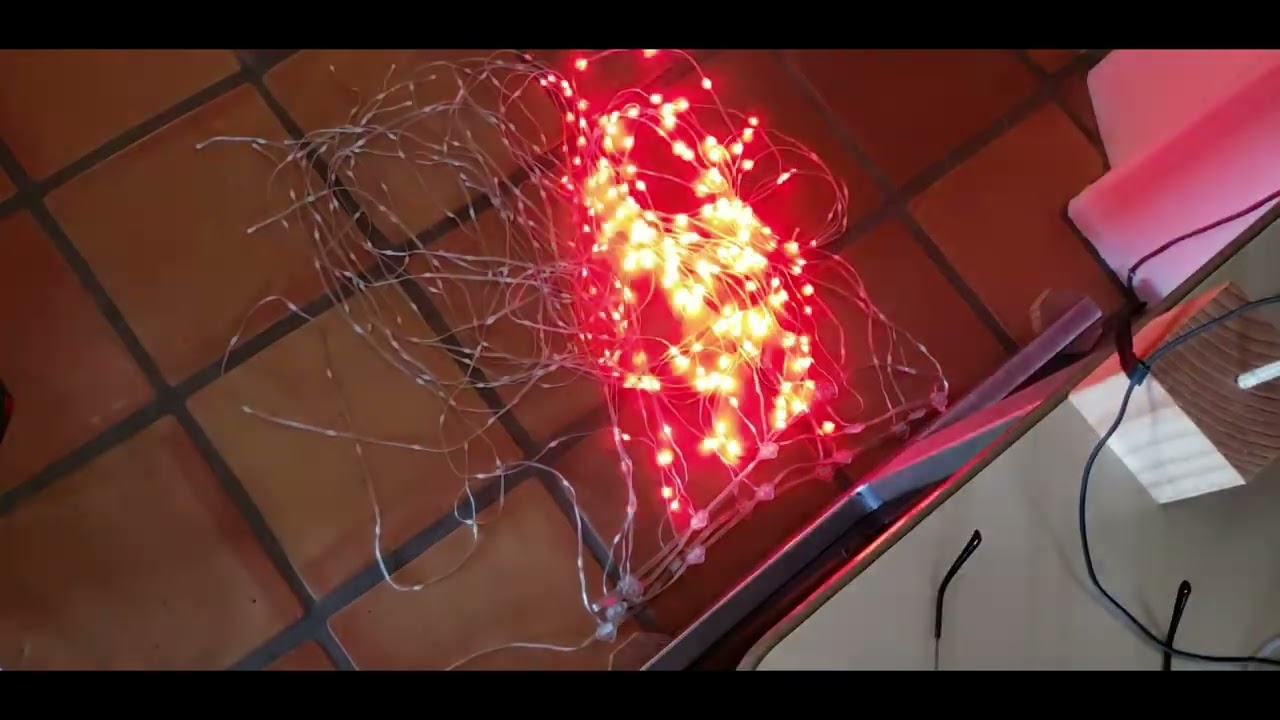

Here’s a first look at my automatic 2D map generator. I’ve got a few features to add before general release, like map rotation and the ability to manually edit points the vision engine can’t figure out, but it’s already pretty robust. Should be ready to try in a week or two!

You did it!!! First boss defeated!

This is super cool. When this is 3D and done via a mobile phone (perhaps with the help of ARKit’s spatial awareness features: scene understanding, ARAnchor/ARNode, and hit testing), oh my god, you’ll have a utility app that everyone working with LEDs will need to have. Holy grail. I have several links saved to other people’s prior attempts on LAA and YouTube!

What. The. F.

This is absolutely insane. Impressive work! I actually have an idea for an art piece where this would once again prove Pixelblaze is the right choice to power it!

Wow! That’s amazing! Do I see Python in the background? Are you using a CV library, and a websocket to poke each pixel?

Very very cool!

This might be the most exciting new feature I’ve seen in a long, long time. When you’re done with it, it’d be interesting to see how it responds to light diffusion (say, a piece of white cloth) over the LEDs, because that would allow mapping LEDs already installed in wearables or other items. Already impressively cool.

@wizard, yes, I’m using the Python client with OpenCV. It’s super handy to be able to control the Pixelblaze while taking images.

A couple of things I found while researching this:

- When you light up an LED, you actually have to throw away the next 10 or so frames while the camera tries to “help” you by adjusting exposure, white balance, etc. This takes time. When looking at other people’s detectors, I saw a lot of weird lighting and sampling problems that I think were related to this sort of issue.

- Huge cheat #1: The Pixelblaze told me how many pixels it has, and I know exactly which one I’m trying to light up. I know the pixel is there. If I don’t get a good detection I can adjust parameters and automatically go back and try again.
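A minimal sketch of both tricks together, assuming a camera object that behaves like OpenCV’s `cv2.VideoCapture` (its `read()` returns an `(ok, frame)` pair). So it runs without hardware, a frame here is a plain 2D list of 0 to 255 brightness values standing in for a grayscale image, and `FakeCamera` is a hypothetical stand-in that replays canned frames. The function names, and the choice of “lower the brightness threshold” as the parameter to adjust after a miss, are illustrative assumptions rather than the actual detector.

```python
def capture_settled_frame(camera, discard=10):
    """Read and throw away `discard` frames, then return the next one.

    Assumes `camera.read()` returns (ok, frame), like cv2.VideoCapture.
    The default of 10 follows the "10 or so frames" estimate; tune per camera.
    """
    frame = None
    for _ in range(discard + 1):
        ok, frame = camera.read()
        if not ok:
            raise RuntimeError("camera read failed")
    return frame


def find_lit_led(frame, threshold):
    """Return the (x, y) centroid of pixels at or above `threshold`, or None."""
    xs = ys = n = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                xs += x
                ys += y
                n += 1
    if n == 0:
        return None
    return (xs / n, ys / n)


def detect_with_retry(camera, thresholds=(240, 200, 160, 120), discard=10):
    """Try progressively lower thresholds until the known-lit LED is found.

    Returns ((x, y), threshold_used), or (None, None) if every attempt misses.
    """
    for threshold in thresholds:
        frame = capture_settled_frame(camera, discard)
        spot = find_lit_led(frame, threshold)
        if spot is not None:
            return spot, threshold
    return None, None


class FakeCamera:
    """Hypothetical stand-in for a webcam: replays frames, repeating the last one."""

    def __init__(self, frames):
        self.frames = frames
        self.reads = 0

    def read(self):
        frame = self.frames[min(self.reads, len(self.frames) - 1)]
        self.reads += 1
        return True, frame
```

Because the lit pixel is known to exist, a miss is treated as “try again with different settings” rather than “nothing here.” A real detector would likely also reject blobs that are too large or oddly shaped, since a low threshold can pick up reflections or ambient light.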

@ZacharyRD, I’m working on diffusion now. I think paper and cloth (anything thin enough to let you distinguish the LEDs when they’re running fairly bright) will work. Complete diffusion over a large surface, though, maybe not.

“Hard” reflection off mirrored surfaces is a bear too. Infinity objects are right out for the moment.

I mean, complete diffusion over a large surface seems almost impossible. I think you’re solving it at the right level: thin diffusion, where you can still visually distinguish individual LEDs, seems like enough! Thanks.

Incredible, and relevant to my interests. “Why can’t the computer do this?” I remember thinking as I clicked on all 400 LEDs in an image the last time I did this with @wizard’s tool.

Do you have a “quantise” or “snap to grid” feature? It would be useful for wearables like mine, which are theoretically regular arrangements but might not look that way from any particular camera angle, given how the fabric lies.

I’ll have some kind of quantization for sure. Not sure about explicit snap-to-grid. Kinda… there is no grid. I’m trying hard not to be picky about lighting, camera angle, moderate amounts of camera movement.
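One simple form of quantization, sketched under the assumption that it means rounding detected coordinates to a fixed step size: slightly jittered points from a regular layout collapse onto shared rows and columns. `quantize` and `step` are hypothetical names, not part of the tool described here.

```python
def quantize(points, step):
    """Round each (x, y) to the nearest multiple of `step` (hypothetical helper).

    Note: Python's round() sends exact .5 ties to the nearest even integer.
    """
    return [(round(x / step) * step, round(y / step) * step) for x, y in points]
```

For example, `quantize([(0.9, 2.2), (3.1, 4.8)], 1.0)` gives `[(1.0, 2.0), (3.0, 5.0)]`. A larger step merges more aggressively, which also means two genuinely distinct LEDs closer together than `step` can land on the same point.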

Right now, the plan is this: it’ll tag the pixels, then you’ll be able to rotate the whole map to the angle you want, drag misbehaving pixels to where they should be, and send the map to the Pixelblaze, or export it as JSON or CSV so you can work on it further.
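The rotate-then-export steps of that plan might look roughly like this sketch. It assumes the map is a list of `(x, y)` tuples in pixel-index order, rotates around the map’s centroid, and writes JSON as an array of `[x, y]` pairs, assumed here to match the array-of-arrays format Pixelblaze’s mapper accepts for 2D maps. The CSV column names and all function names are arbitrary, hypothetical choices.

```python
import csv
import io
import json
import math


def rotate_map(points, degrees):
    """Rotate every (x, y) point by `degrees` counterclockwise around the centroid."""
    if not points:
        return []
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    a = math.radians(degrees)
    cos_a, sin_a = math.cos(a), math.sin(a)
    rotated = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        rotated.append((cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a))
    return rotated


def to_json(points, ndigits=3):
    """Serialize the map as a JSON array of [x, y] pairs, in pixel-index order."""
    return json.dumps([[round(x, ndigits), round(y, ndigits)] for x, y in points])


def to_csv(points, ndigits=3):
    """Serialize the map as CSV with an index column for further editing."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["index", "x", "y"])
    for i, (x, y) in enumerate(points):
        writer.writerow([i, round(x, ndigits), round(y, ndigits)])
    return buf.getvalue()
```

Rotating around the centroid keeps the map roughly in place, so dragging a misbehaving pixel afterwards works in the same neighborhood of coordinates the user is already looking at.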

Hi! Any updates on this project? Can’t wait to try it.

I think you can set the exposure parameters directly in OpenCV so that autoexposure is off.

I’m still working on it. It’s on the way to becoming a phone app.

I found pretty quickly that the LED objects people wanted to map (like large sculptural things, and long LED strip arrangements) were too big or too geometrically inconvenient to fit in a single webcam frame.

To do the job right, you need to be able to walk around and keep the camera pointed at the active portion of the object, while the software keeps track of the physical coordinates of the whole thing.

So the phone, with its really nice camera and large assortment of position sensors, is really the only logical place for this to live.

At the moment things are a little slow. I’m using this as an excuse to finally learn iOS development, and the early learning curve is steep. (Lots of help and examples for people writing simple “display something from a cloud database” apps, not so much for people who want to do a whole bunch of local processing with hardware assistance.)

Are you still mapping pixels one at a time?

Yes, and likely will for the foreseeable future. It can go quite quickly, and the user will be able to set the speed.

My thinking is that if you’re working with a big object, it’s easier to keep the camera pointed at roughly the right area if we’re simply going through the pixels in order. And doing the blinky encoder thing, or even encoding with multiple colors, is way trickier to make reliable in real-world conditions than a simple “Where is the lit LED in this frame?” model.
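A sketch of the one-pixel-at-a-time loop, with the user-set speed exposed as a delay between lighting a pixel and capturing it. `light_only`, `capture`, and `detect` are hypothetical hooks supplied by the caller (for example, a call into a Pixelblaze client and a brightest-spot detector); none of them is a real API.

```python
import time


def map_sequentially(pixel_count, light_only, capture, detect, delay_s=0.1):
    """Light each pixel in index order and record where it appears.

    light_only(i): hypothetical hook that turns on pixel i and all others off.
    capture(): hypothetical hook returning a camera frame.
    detect(frame): hypothetical hook returning (x, y) or None on a miss.
    delay_s: user-adjustable speed, i.e. settle time before each capture.
    """
    positions = []
    for i in range(pixel_count):
        light_only(i)
        time.sleep(delay_s)
        positions.append(detect(capture()))
    return positions
```

Misses come back as `None` in their index slot, which leaves a natural list of pixels to retry or fix by hand, and walking the indices in order keeps the lit LED moving along the object predictably as the user follows it with the camera.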

You don’t think some form of binary encoding or similar would work?

Maybe someday. To do a bunch of pixels at once, you’d want to make a video of the whole thing, then feed it to a server somewhere for analysis. I’ll probably need to do this eventually because down the road I want to do full 3D mapping, which means generating a 3D scene model from multiple images. That requires a pretty hefty computer on the back end at the moment.

For v1 though, I’m sticking to something simple that will run locally on a reasonably recent phone.
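To show what the binary-encoding alternative involves, here is a sketch of the basic idea (not anything implemented in this project): frame k lights every pixel whose code has bit k set, so N pixels need only about log2(N) frames instead of N. Codes are index + 1 so every pixel lights at least once. `bit_frames` and `decode` are hypothetical names.

```python
def bit_frames(pixel_count):
    """Return one on/off pattern per frame.

    Frame k lights pixel i when bit k of (i + 1) is set; the +1 offset
    ensures no pixel is dark in every frame.
    """
    n_bits = pixel_count.bit_length()  # enough bits for codes 1..pixel_count
    return [[((i + 1) >> k) & 1 for i in range(pixel_count)] for k in range(n_bits)]


def decode(observed):
    """Rebuild a pixel index from the on/off sequence seen at one spot.

    observed[k] is 1 if the spot was lit in frame k. Returns None if never lit.
    """
    code = sum(bit << k for k, bit in enumerate(observed))
    return code - 1 if code > 0 else None
```

For 700 pixels that is 10 frames instead of 700. The catch is the reliability problem described above: every pixel has to be read correctly in every frame, and a single misread frame silently decodes to a different, valid-looking index.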

Where are you up to with this? I’m just finishing up another two panels’ worth of LEDs on my jacket, so something like 700 pixels total now, and I’m not looking forward to doing the clicky-clicky on each of them for the mapping. It’s just the front, so effectively 2D, and it will fit in one frame. Would love to help test it out for you!

Mind sharing? Just in case @zranger1 hasn’t been able to make any progress.

The phone app is going to take a while because… I have to learn to write a phone app!

In the meantime, I’ve cleaned up the source for the last “experimental” OpenCV/Python version before I decided to go mobile. If you’d like to try it out, it’s in the GitHub repository below:

(And if you do try it, please let me know how it goes!)