The first device is a Pixelblaze Pro and the 2nd is a Pixelblaze Pico, both running the latest v3.66.

I didn’t find any docs on how lead/follow works, so I just had the first device take the 2nd as a follower.

I realize the hardware is different, but both are still ESP32s running at the fastest 240 MHz speed in settings.

Both have 1800 pixels (30x60) and are on nodeid 0.

I left the mapper on the first device as:

    function (pixelCount) {
      width = 60
      var map = []
      for (i = 0; i < pixelCount; i++) {
        y = i / width
        x = i % width
        map.push([y, x])
      }
      return map
    }

Without docs, I guessed that I had to offset the coordinates on the 2nd one like this: `map.push([y+30, x])` (the only line I changed).
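To make the two mappers easier to compare, here is a rough sketch that folds them into one parameterized function. `makePanelMap` and `rowOffset` are hypothetical names used only for illustration and are not part of Pixelblaze. The arithmetic is copied unchanged from the mapper above, including the unfloored `i / width`.

```javascript
// Hypothetical helper, not a Pixelblaze API: builds the map for one device.
// rowOffset is added to the first coordinate (0 on the first device, 30 on the 2nd).
function makePanelMap(pixelCount, rowOffset) {
  var width = 60
  var map = []
  for (var i = 0; i < pixelCount; i++) {
    var y = i / width // same as the posted mapper: not floored, so y is fractional
    var x = i % width
    map.push([y + rowOffset, x])
  }
  return map
}

var firstMap = makePanelMap(1800, 0)   // equivalent to map.push([y, x])
var secondMap = makePanelMap(1800, 30) // equivalent to map.push([y+30, x])
```

With these inputs, the first coordinate runs from 0 to just under 30 on the first device and from 30 to just under 60 on the 2nd, while the second coordinate runs from 0 to 59 on both.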

The results are bad in 2 ways, though:

- The eye coordinates are wrong and offset halfway on both devices.
- There is an unbearable 0.5 s delay between the 2 devices, so the eyes open and close at different times, ruining the entire effect.

Both are on the same wifi, one IP address off from one another.

Any ideas what I’m doing wrong?

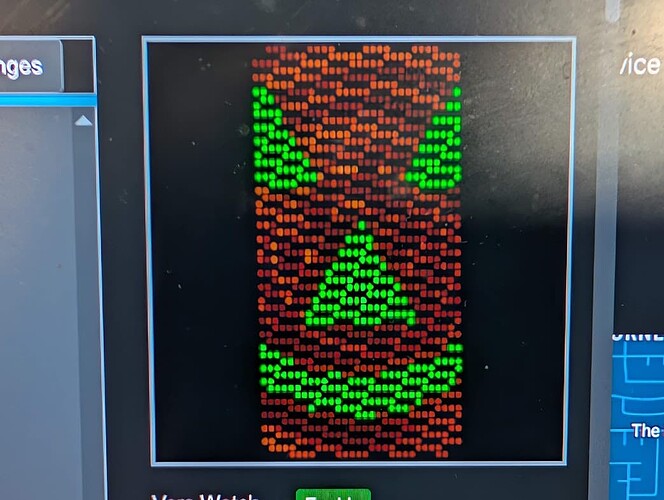

The display is cut in half, 30+30, and looks like this with 2 devices.

When I plug everything back in as a single chain of 3600 pixels on a single device, it goes back to working.

I tried another pattern and it has the same problem: both patterns think the display is 30 pixels wide instead of 60 pixels wide and mis-render as a result. Basically, they are not aware of the 2nd device, and somehow the same display gets output a 2nd time, with a 0.5 s delay, on the 2nd device.