Sadly, the frequency buckets aren’t very good at specifically detecting bass, as the higher buckets tend to be sensitive and the lower buckets much less so. You can isolate a high-pitched sound to a single bucket, but low sounds tend to resonate into the higher frequency buckets as well.

I’ve been playing with this, using tools like

https://www.szynalski.com/tone-generator/

and https://drumbit.app

I think you’re on the right track: look at lots of buckets at once for some energy signature, such as every bucket having some energy, which tends to indicate lower sounds like a bass beat.
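As a rough illustration of that "all buckets have some energy" idea, here is a minimal sketch in plain JavaScript so it can be tried off-device. The helper names (`activeFraction`, `isBroadbandHit`) and the `floor` and `minFraction` parameters are made up for this example, and I haven't tuned any thresholds against real sensor data. It sticks to `var` and simple loops, so it should port to a pattern with little change, but treat that as an assumption.

```javascript
// Hypothetical helper: fraction of buckets whose magnitude is above a floor.
// frequencyData is assumed to be an array of magnitudes (32 on the sensor board).
function activeFraction(frequencyData, floor) {
  var active = 0;
  for (var i = 0; i < frequencyData.length; i++) {
    if (frequencyData[i] > floor) active++;
  }
  return active / frequencyData.length;
}

// Hypothetical rule: treat it as a broadband (bass-like) hit when at least
// minFraction of the buckets have some energy at the same time.
function isBroadbandHit(frequencyData, floor, minFraction) {
  return activeFraction(frequencyData, floor) >= minFraction;
}
```

A pure high tone should light only a bucket or two and fail this check, while a bass beat that smears across most buckets should pass it. The floor would probably need to track `energyAverage` rather than being a constant.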

Quick and dirty code I was using for testing:

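```javascript
// Rough attempt to display brightness relative to frequency activity on a 1D line of lights

// Populated by the sensor board
export var frequencyData
export var energyAverage
export var maxFrequencyMagnitude
export var maxFrequency

export var gain = 1

// UI slider: scales how much of the average energy is subtracted from each bucket
export function sliderGain(v) {
  gain = v * 4
}

// Working copy of the 32 frequency buckets
export var buckets = array(32)

// Subtract a gain-scaled floor from each bucket, clamp at zero, then scale up for display
function bucketUpdate(v, i, a) {
  l = frequencyData[i] - ((0.001 + energyAverage) * gain)
  if (l < 0) { l = 0 }
  return l * 1000
}

// Do the bucket math once per frame rather than once per pixel
export function beforeRender(delta) {
  buckets.mutate(bucketUpdate)
}

export function render(index) {
  h = index / pixelCount
  s = 1
  v = buckets[index % 32]
  hsv(h, s, v)
}
```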

I copied the frequency values into a new buckets array so I could play with adjusting them (for example, reducing them all by energyAverage, scaled by a gain slider, which is what the example does) and do the math once per render loop.

With the tone generator, you usually can’t get the low buckets to register, while your voice can, for example.

Some beats land around buckets 7-9, so those are likely the key buckets. Then again, bass tends to add energy to many buckets, so your approach of looking at a mass of buckets at once might be better.
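To compare the two approaches, one option is to measure how much of the total energy sits in buckets 7-9. This is only a sketch in plain JavaScript: `bandEnergy` and `bandShare` are hypothetical helpers, the 7-9 range is just what I happened to see in testing, and I haven't tried this on the device.

```javascript
// Hypothetical helper: sum of bucket magnitudes from lo to hi, inclusive.
function bandEnergy(frequencyData, lo, hi) {
  var sum = 0;
  for (var i = lo; i <= hi; i++) {
    sum += frequencyData[i];
  }
  return sum;
}

// Hypothetical helper: share of the total energy that falls in buckets lo..hi.
// Returns 0 when there is no energy at all, to avoid dividing by zero.
function bandShare(frequencyData, lo, hi) {
  var total = bandEnergy(frequencyData, 0, frequencyData.length - 1);
  if (total <= 0) return 0;
  return bandEnergy(frequencyData, lo, hi) / total;
}
```

If `bandShare(frequencyData, 7, 9)` stays high on beats, the narrow band is carrying them. If it stays low while the beat is clearly audible, the energy is spread out and the many-buckets approach is probably the better signal.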

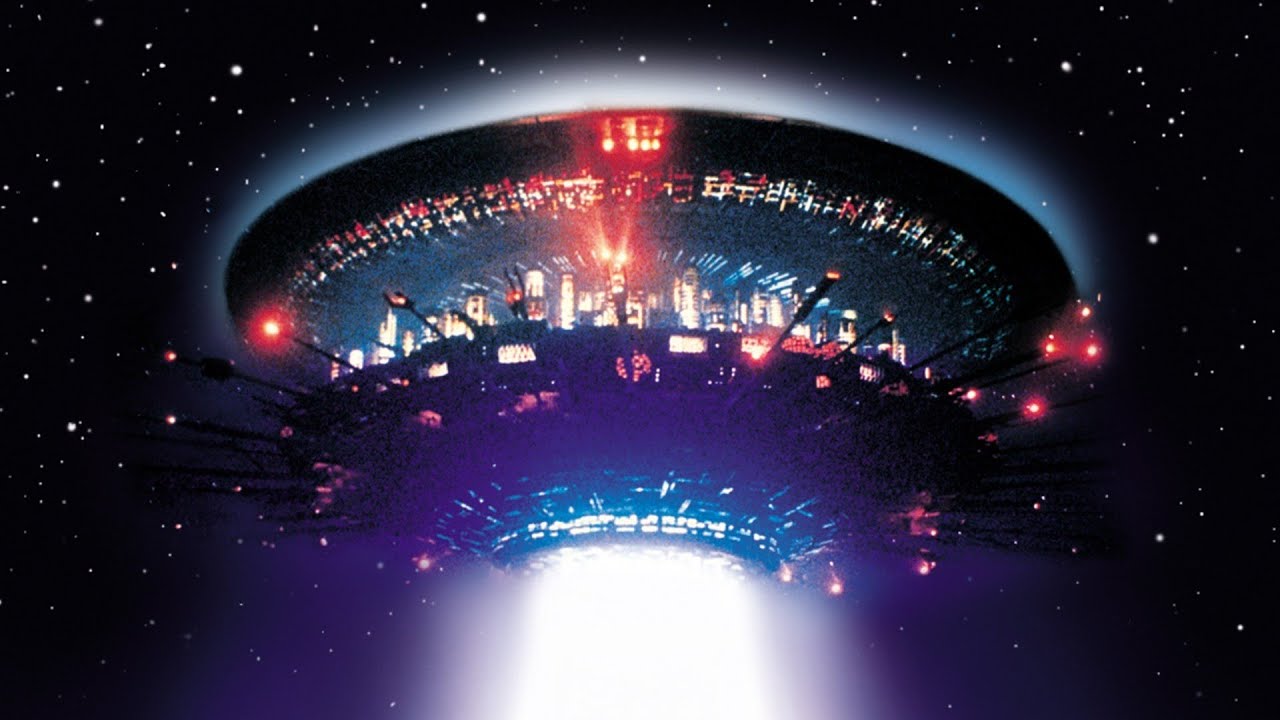

Obligatory:

ooh, I found an mp3

https://www.televisiontunes.com/uploads/audio/Close%20Encounters%20of%20the%20Third%20Kind%20-%20Wild%20Signals.mp3 (thanks to archive.org)

With my example, you can see how clumpy the low bucket activity is, while the high notes are distinct. Of the ‘5 tones’, when they drop an octave, I get no bucket at all.

Ideally, I’d want this particular set of sounds to light up a much wider range of lights, rather than having the low buckets all light up at once. I don’t think that’s possible with the sound board as is, though.