@Scruffynerf,

You mean make each PB do the work of the entire combined rendering? One of the advantages of a multi-PB system is increased rendering throughput over a single large PB install with output expander ports.

True, some patterns like KITT may be much harder to render in small portions, but I think most of them would be better off with a partial render. I think a virtualized KITT could still compute a single “swoosh” that each PB's render draws from, but the leader location should probably be based on time() instead of an accumulation of direction * delta.
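Here's a rough sketch of what I mean by a time-based leader. It's plain JavaScript so it can run anywhere; `triangle` here is my own stand-in for the built-in triangle(), and `leaderPosition` and `totalPixels` are names I made up for illustration. It also assumes every PB sees the same time value, which is the part I haven't worked out.

```javascript
// Stand-in for Pixelblaze's triangle(): ramps 0 -> 1 -> 0 as t goes 0 -> 1.
function triangle(t) {
  t = t - Math.floor(t) // wrap into 0..1
  return t < 0.5 ? t * 2 : 2 - t * 2
}

// Leader location computed purely from a 0..1 time value, so nothing
// accumulates from frame to frame.
// totalPixels is the size of the whole virtual strip, not the local one.
function leaderPosition(t, totalPixels) {
  return triangle(t) * (totalPixels - 1)
}
```

In a pattern this would be something like triangle(time(.05)) * (totalPixels - 1) in beforeRender. Since the position depends only on the current time value, any PB evaluating it at the same moment gets the same leader.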

I like the map idea: include the whole thing in the mapper, just put the “local” pixels first. This would let you see the big picture too.
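Something like this is how I picture the map being built. Treat it as a sketch only: `buildMap`, `segment` and `segments` are hypothetical names, the layout is just a straight line along x, and I'm assuming the mapper will take the full list with the local pixels at the front.

```javascript
// Build a 2D map of the whole installation, local pixels first.
// segment is this PB's position (0-based), segments is the number of PBs.
// Assumes totalPixels divides evenly between the PBs.
function buildMap(totalPixels, segment, segments) {
  var perSegment = totalPixels / segments
  var start = segment * perSegment
  var local = []
  var others = []
  for (var i = 0; i < totalPixels; i++) {
    var point = [i, 0] // straight strip along x (an assumption)
    if (i >= start && i < start + perSegment) {
      local.push(point)
    } else {
      others.push(point)
    }
  }
  return local.concat(others)
}
```

For example, buildMap(9, 1, 3) puts pixels 3, 4 and 5 first, followed by the rest of the strip. Because the map covers the whole installation, the local pixels should land in the right part of the world coordinate range.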

How about index-based patterns? Most use index and pixelCount to get a ratio, so tweaking those could do the trick, except where patterns use the index 1:1 with an array, like KITT and blinkfade. Still, I think it could make a good first pass for most patterns.

Let’s say a pattern uses index/pixelCount, which results in a number from 0 up to nearly 1.0 at the far end. If we wanted to split that across 3 PBs in thirds, we could just scale it down to 1/3 and then offset it to where that PB sits.

So on the first PB, we’d swap that out with ((1/3) * index / pixelCount). Now we have a number between 0 and .333, roughly the first 1/3rd of the animation.

For the second PB, we take that and “move” it to the right by 1/3rd: ((1/3) * index/pixelCount + 1/3).

And likewise for the last one, still scaled to 1/3rd but offset 2/3rds: ((1/3) * index/pixelCount + 2/3).

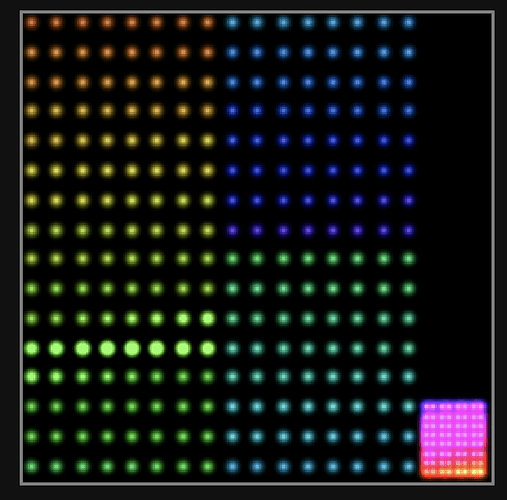
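The three cases above are the same formula with a different offset, so it could be written once. A minimal sketch, where `localRatio`, `segment` and `segments` are names I'm making up for illustration:

```javascript
// Ratio across the whole installation for one pixel on one PB.
// index and pixelCount are the local values on that PB;
// segment is the PB's position (0-based), segments is the number of PBs.
function localRatio(index, pixelCount, segment, segments) {
  var localScale = 1 / segments
  var localOffset = segment / segments
  return (index / pixelCount) * localScale + localOffset
}
```

With 3 PBs, segment 0 gives 0 to about .333, segment 1 starts at 1/3, and segment 2 starts at 2/3, matching the expressions above.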

I think 2D and 3D will be easier since they work in world units instead of pixels. For example, to change a render2D where you had 3 PBs in the same kind of arrangement:

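    localScale = 1/3
    localOffset = 0/3 // for the first segment. 1/3 for the 2nd. 2/3 for the 3rd

    export function render2D(index, x, y) {
      // squeeze this PB's x into its share of the whole, then shift it into place
      x = (x*localScale) + localOffset
      // ...the rest of the pattern uses the remapped x
    }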

I think converting render to a render1D might be better. Swap out all instances of index/pixelCount with x in a render1D(index, x), then the coordinate math is similar to 2D/3D since you are working in “world units” again.

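    export function render(index) {
      // hand the ratio to render1D as a world-unit x
      render1D(index, index/pixelCount)
    }

    localScale = 1/3
    localOffset = 0/3 // for the first segment. 1/3 for the 2nd. 2/3 for the 3rd

    export function render1D(index, x) {
      x = (x*localScale) + localOffset
      // ...the rest of the pattern uses x wherever it used index/pixelCount
    }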